RELATED RESEARCH: NIST Technical Note 1779: General Guide on Emergency Communications Strategies for Buildings, February 2013

Category Archives: @NIST

AI Risk Management Framework

- Evaluating LLMs for Real-World Vulnerability Repair in C/C++ Code

NIST conducted a study to evaluate the capability of advanced LLMs, such as ChatGPT-4 and Claude, in repairing memory corruption vulnerabilities in real-world C/C++ code. The project curated 223 code snippets with vulnerabilities like memory leaks and buffer errors, assessing LLMs’ proficiency in generating localized fixes. This work highlights LLMs’ potential in automated code repair and identifies limitations in handling complex vulnerabilities.

- Translating Natural Language Specifications into Access Control Policies

This project explores the use of LLMs for automated translation and information extraction of access control policies from natural language sources. By leveraging prompt engineering techniques, NIST demonstrated improved efficiency and accuracy in converting human-readable requirements into machine-interpretable policies, advancing automation in security systems.

- Assessing Risks and Impacts of AI (ARIA) Program

NIST’s ARIA program evaluates the societal risks and impacts of AI systems, including LLMs, in realistic settings. The program includes a testing, evaluation, validation, and verification (TEVV) framework to understand LLM capabilities, such as controlled access to privileged information, and their broader societal effects. This initiative aims to establish guidelines for safe AI deployment.

- AI Risk Management Framework (AI RMF)

NIST developed the AI RMF to guide the responsible use of AI, including LLMs. The framework provides a structured approach to managing risks associated with AI systems. It is organized around four core functions (Govern, Map, Measure, and Manage) and is supported by a companion Playbook to help organizations operationalize trustworthy AI across sectors. In July 2024, NIST released a Generative AI Profile (NIST AI 600-1) that applies the framework to risks specific to generative AI, including LLMs.

- AI Standards “Zero Drafts” Pilot Project

Launched to accelerate AI innovation, this project focuses on developing AI standards, including those relevant to LLMs, through an open and collaborative process. It aims to create flexible guidelines that evolve with LLM advancements, encouraging input from stakeholders to ensure robust standards.

- Technical Language Processing (TLP) Tutorial

NIST collaborated on a TLP tutorial at the 15th Annual Conference of the Prognostics and Health Management Society to foster awareness and education on processing large volumes of text using machine learning, including LLMs. The project explored how LLMs can assist in content analysis and topic modeling for research and engineering applications.

- Evaluation of LLM Security Against Data Extraction Attacks

NIST investigated vulnerabilities in LLMs, such as training data extraction attacks, using GPT-2 (a predecessor of modern LLMs) as an example. This project references techniques developed by Carlini et al. It aims to understand and mitigate privacy risks in LLMs and to contribute to safer model deployment.

- Fundamental Research on AI Measurements

As part of NIST’s AI portfolio, this project conducts fundamental research to establish scientific foundations for measuring LLM performance, risks, and interactions. It includes developing evaluation metrics, benchmarks, and standards to ensure LLMs are reliable and trustworthy in diverse applications.

- Adversarial Machine Learning (AML) Taxonomy for LLMs

NIST developed a taxonomy of adversarial machine learning attacks, including those targeting LLMs, such as evasion, data poisoning, privacy, and abuse attacks. This project standardizes terminology and provides guidance to enhance LLM security against malicious manipulations, benefiting both cybersecurity and AI communities.

- Use-Inspired AI Research for LLM Applications

NIST’s AI portfolio includes use-inspired research to advance LLM applications across government agencies and industries. This project develops guidelines and tools to operationalize LLMs responsibly, focusing on practical implementations like text summarization, translation, and question-answering systems.
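The access control translation project above converts natural-language requirements into machine-interpretable policies. A minimal Python sketch can show what such a policy might look like once extracted. It assumes a hypothetical rule format (role, action, resource type, effect) and a deny-by-default evaluator in which an explicit deny overrides any permit. Neither is NIST's actual output format, and the LLM extraction step itself is not shown. The names `Rule` and `is_allowed` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One machine-interpretable access control rule (hypothetical format)."""
    subject_role: str
    action: str
    resource_type: str
    effect: str  # "permit" or "deny"


def is_allowed(rules, role, action, resource_type):
    """Deny by default; an explicit deny overrides any matching permit."""
    matches = [
        r for r in rules
        if (r.subject_role, r.action, r.resource_type) == (role, action, resource_type)
    ]
    if any(r.effect == "deny" for r in matches):
        return False
    return any(r.effect == "permit" for r in matches)


# Rules an LLM might extract from the hypothetical requirement
# "Nurses can read patient records, but they cannot modify them."
policy = [
    Rule("nurse", "read", "patient_record", "permit"),
    Rule("nurse", "write", "patient_record", "deny"),
]

print(is_allowed(policy, "nurse", "read", "patient_record"))   # True
print(is_allowed(policy, "nurse", "write", "patient_record"))  # False
print(is_allowed(policy, "doctor", "read", "patient_record"))  # False: no matching rule
```

Under the deny-by-default choice, a request that matches no rule is refused. This is the usual safe behavior when an automated extraction step may have missed a requirement.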

Remarks:

- These projects reflect NIST’s focus on evaluating, standardizing, and securing LLMs rather than developing LLMs themselves. NIST’s role is to provide frameworks, guidelines, and evaluations to ensure trustworthy AI.

- Some projects, like ARIA and AI RMF, are broad programs that encompass LLMs among other AI systems, but they include specific LLM-related evaluations or applications.

Standards Curricula Program

NIST Standards Coordination Office Curricula Development Cooperative Agreement Program.

NIST continues its Standards Curriculum program through the Standards Coordination Office Curricula Development Cooperative Agreement Program (SCO CD CAP), formerly known as the Standards Services Curricula Development Cooperative Agreement Program. This ongoing initiative, started in 2012 (initially as Education Challenge Grants), funds U.S. colleges and universities to develop and integrate undergraduate and/or graduate-level curricula on documentary standards, standards development, and standardization into courses, modules, seminars, and learning resources. The University of Michigan is a past recipient of a standards education award through this program.

The most recent funding round was for Fiscal Year 2025 (FY25):

- The Notice of Federal Funding Opportunity (NOFO) was released on January 14, 2025.

- Applications were due by April 14, 2025.

- NIST anticipated awarding up to 8 grants, each up to $100,000, with project periods of up to 3 years (potentially extending into 2027–2028).

Projects funded under FY25 involve curriculum development and implementation that may continue into 2026 and beyond, including required workshops.

As of early 2026, no new Notice of Federal Funding Opportunity (NOFO) has been announced for FY2026. The program has historically issued funding rounds annually or near-annually, with recent awards in prior years (e.g., 2024 awards totaling over $1.1 million to 8 universities). However, due to proposed budget reductions for NIST in FY2026, future rounds may be impacted or delayed.

2024 Update: NIST Awards Funding to 8 Universities to Advance Standards Education

The Standards Coordination Office of the National Institute of Standards and Technology conducts standards-related programs and provides knowledge and services that strengthen the U.S. economy and improve the quality of life. Its goal is to equip U.S. industry with the standards-related tools and information necessary to compete effectively in the global marketplace.

Every year it awards grants to colleges and universities through its Standards Services Curricula Development Cooperative Agreement Program to support curriculum development at the undergraduate and/or graduate level. These cooperative agreements support the integration of standards and standardization content into seminars, courses, and learning resources. The recipients work with NIST to strengthen education and learning about standards and standardization.

The 2019 grant cycle will require application submissions before April 30, 2019 (contingent upon normal operation of the Department of Commerce). Specifics about the deadline will be posted on the NIST and ANSI websites. We will pass on those specifics as soon as they are known.

The winners of the 2018 grant cycle are Bowling Green State University, Michigan State University, Oklahoma State University, and Texas A&M University.

The University of Michigan received an award during last year’s grant cycle (2017). An overview of the curriculum, which covers human factors in automotive standards, is linked below:

NIST Standards Curricula Intro Presentation: University of Michigan (Paul Green)

Information about applying for the next grant cycle is available from NIST and from Ms. Mary Jo DiBernardo (301-975-5503; maryjo.dibernardo@nist.gov).

Time & Frequency Services

The National Institute of Standards and Technology is responsible for maintaining and disseminating official time in the United States. While NIST does not have a direct role in implementing clock changes for daylight saving time, it does play an important role in ensuring that timekeeping systems across the country are accurate and consistent.

Before each daylight saving time change, NIST issues public announcements reminding individuals and organizations to adjust their clocks. NIST also provides resources to help people synchronize their clocks, such as the time.gov website and the NIST shortwave radio station WWV.

In addition, NIST develops and operates atomic clocks that contribute to Coordinated Universal Time (UTC), the international standard for timekeeping. The International Bureau of Weights and Measures (BIPM) computes UTC from clocks in laboratories around the world, and NIST maintains the U.S. realization of it, UTC(NIST). UTC is the basis for civil time in the United States. It is also the reference time for many systems, including the Global Positioning System (GPS) and internet time services that use the Network Time Protocol (NTP).

In short, NIST does not set or implement daylight saving time changes. However, its accurate and consistent time dissemination allows clocks and timekeeping systems across the country to make those changes smoothly.
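As a small illustration of the clock changes discussed above, the Python sketch below computes when daylight saving time begins and ends in a given year. It uses the U.S. rule in effect since 2007: DST starts on the second Sunday in March and ends on the first Sunday in November, both at 2:00 a.m. local time, in areas that observe it. The helper names `nth_weekday` and `us_dst_dates` are hypothetical. The sketch does not handle earlier rules or local exemptions such as Hawaii and most of Arizona.

```python
import calendar
from datetime import date


def nth_weekday(year, month, weekday, n):
    """Return the date of the n-th given weekday (Monday=0 ... Sunday=6) in a month."""
    first = date(year, month, 1)
    # Days from the 1st of the month to the first occurrence of `weekday`.
    offset = (weekday - first.weekday()) % 7
    return date(year, month, 1 + offset + 7 * (n - 1))


def us_dst_dates(year):
    """Start and end dates of U.S. DST under the rule in effect since 2007."""
    start = nth_weekday(year, 3, calendar.SUNDAY, 2)   # second Sunday in March
    end = nth_weekday(year, 11, calendar.SUNDAY, 1)    # first Sunday in November
    return start, end


start, end = us_dst_dates(2024)
print(start.isoformat(), end.isoformat())  # 2024-03-10 2024-11-03
```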

Time Realization and Distribution

Artificial Intelligence Standards

U.S. Artificial Intelligence Safety Institute

The White House: ENSURING A NATIONAL POLICY FRAMEWORK FOR ARTIFICIAL INTELLIGENCE

STDMi: OMB Circular A-119 & the NTTAA: How ANSI-accredited standards become federal law

ANSI Response to NIST “A Plan for Global Engagement on AI Standards”

On April 29, 2024, NIST released a draft plan for global engagement on AI standards (NIST AI 100-5).

Comments were due by June 2, 2024.

Request for Information Related to NIST’s Assignments Under Sections 4.1, 4.5 and 11 of the Executive Order Concerning Artificial Intelligence

The National Institute of Standards and Technology seeks information to assist in carrying out several of its responsibilities under Executive Order 14110 on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, issued on October 30, 2023. Among other things, the E.O. directs NIST to undertake an initiative for evaluating and auditing capabilities relating to artificial intelligence (AI) technologies. It also directs NIST to develop a variety of guidelines, including guidelines for conducting AI red-teaming tests to enable deployment of safe, secure, and trustworthy systems.

Regulations.gov Filing: NIST-2023-0009-0001

Browse Posted Comments (72 as of February 2, 2024 | 12:00 EST)

Standards Michigan Public Comment

Did you know? If you’ve seen clocks advertised to consumers as “atomic clocks,” those are actually listening to NIST radio stations’ time signals so they can count the seconds accurately.

— National Institute of Standards and Technology (@NIST) January 31, 2024

Standard Reference Material

Metrology is the scientific discipline that deals with measurement, including both the theoretical and practical aspects of measurement. It is a broad field that encompasses many different areas, including length, mass, time, temperature, and electrical and optical measurements. The goal of metrology is to establish a system of measurement that is accurate, reliable, and consistent. This involves the development of standards and calibration methods that enable precise and traceable measurements to be made.

The International System of Units (SI) is the most widely used system of units today. It is built on seven base units: the second, meter, kilogram, ampere, kelvin, mole, and candela. Since the 2019 revision of the SI, all of them have been defined by fixing the numerical values of seven defining constants, such as the speed of light and the Planck constant. Another important aspect of metrology is the development and use of measurement instruments and techniques. These must be designed to minimize errors and uncertainties in measurements. They must also be calibrated against recognized standards to ensure accuracy and traceability.

Metrology also involves the development of statistical methods for analyzing and interpreting measurement data. These methods are used to quantify the uncertainty associated with measurement results and to determine the reliability of those results.
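One of the most common statistical methods in metrology is the Type A evaluation of standard uncertainty described in the Guide to the Expression of Uncertainty in Measurement (GUM). For n repeated readings, the best estimate is their mean, and its standard uncertainty is the sample standard deviation divided by sqrt(n). The Python sketch below applies that formula to a set of hypothetical length readings. The function name `type_a_uncertainty` and the data are illustrative assumptions, and a complete uncertainty budget would also include Type B components such as calibration uncertainty.

```python
import math
import statistics


def type_a_uncertainty(readings):
    """Return (mean, standard uncertainty of the mean) for repeated readings.

    Type A evaluation: experimental standard deviation s divided by sqrt(n).
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings")
    mean = statistics.fmean(readings)
    s = statistics.stdev(readings)  # sample standard deviation (n - 1 denominator)
    return mean, s / math.sqrt(n)


# Hypothetical repeated length readings, in millimeters
readings = [10.02, 10.01, 10.03, 10.02, 10.02]
mean, u = type_a_uncertainty(readings)
print(f"{mean:.3f} mm, u = {u:.4f} mm")
```

Dividing by sqrt(n) reflects the fact that the mean of several readings scatters less than any single reading. Taking more readings therefore reduces this component of the uncertainty, but it does not reduce systematic effects.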

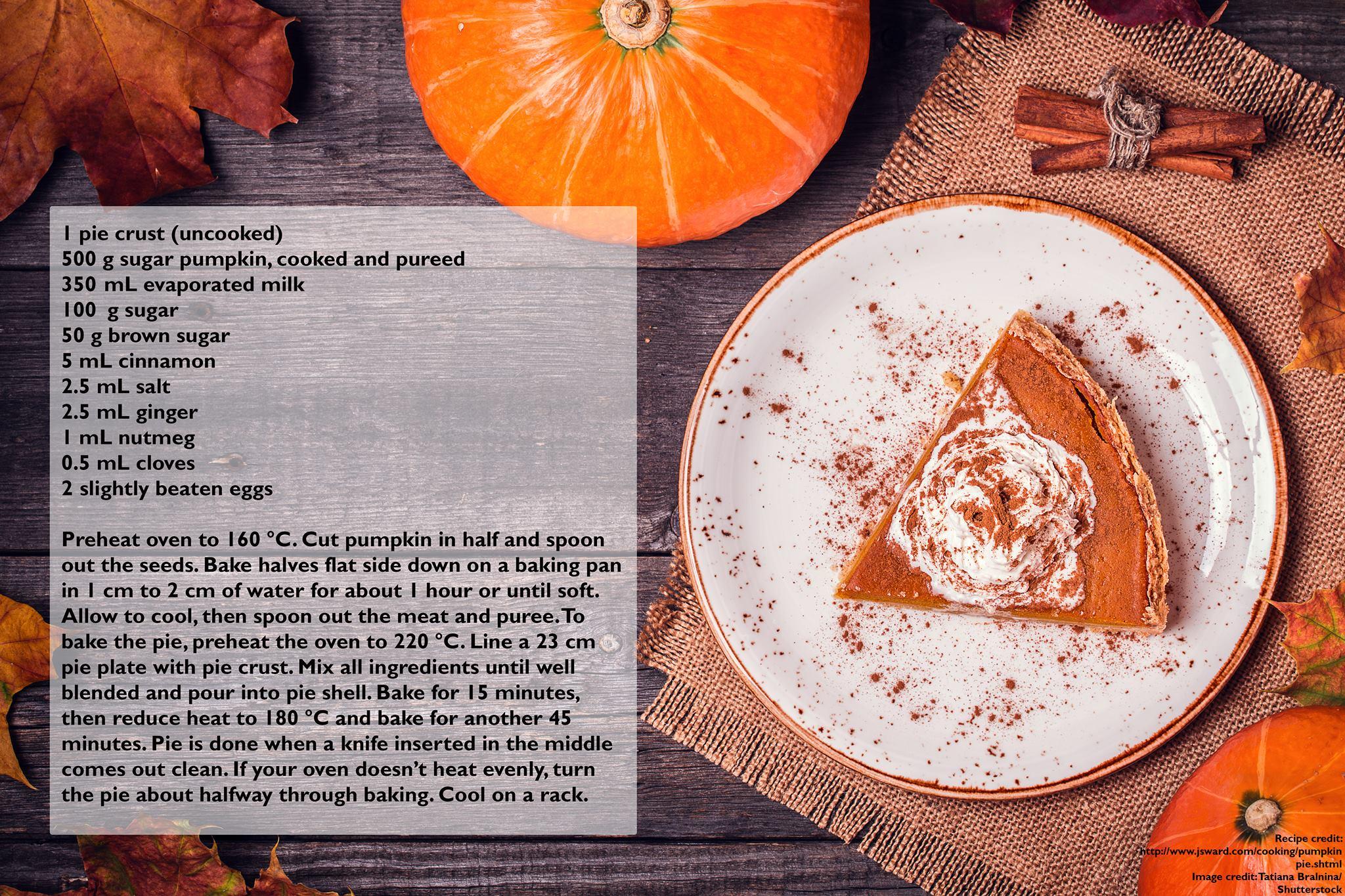

Pumpkin Pie

“There is no love sincerer than the love of food.”

– George Bernard Shaw

Related:

New update alert! The 2022 update to the Trademark Assignment Dataset is now available online. Find 1.29 million trademark assignments, involving 2.28 million unique trademark properties issued by the USPTO between March 1952 and January 2023: https://t.co/njrDAbSpwB

— USPTO (@uspto) July 13, 2023

Standards Michigan Group, LLC

2723 South State Street | Suite 150

Ann Arbor, MI 48104 USA

888-746-3670